Auditing artificial intelligence

Guidance for internal auditors starting to think about how to audit artificial intelligence (AI). Frameworks within the section on challenges and solutions may also be of use to members with more experience in this subject area.

AI is a complex emerging technology with far-reaching consequences across a wide range of industries and professions. Adoption rates vary but as industries take AI on board, challenges begin to arise in respect of auditing the technology and its business impact as internal auditors seek to provide assurance around it.


The Oxford dictionary defines AI as “the theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

Unlike traditional software, AI does not follow a fixed set of predetermined rules, as these typically inhibit the technology’s ability to learn and adapt. It covers a host of specialised technologies including:

- Machine learning (ML) – the algorithms that parse data sets and learn to make informed decisions. The computer program learns from experience by performing tasks and seeing how task performance improves with experience. ML algorithms can work efficiently on low-end machines. In ML, problems are broken down into several parts and each part is solved individually.

- Deep learning (DL) – this branch of AI tries to closely mimic the human mind. DL uses an end-to-end problem-solving approach, based on artificial neural networks that require numerous parameters and specialist high-end technology to operate.

- Natural language processing (NLP) – NLP enables computers to understand, interpret and manipulate human language, combining AI and linguistics to communicate with machines using natural language. Current uses include voice-activated platforms and chatbot technology.

- Computer vision – In simple terms, computer vision trains computers to understand and interpret the visual world, obtaining information from images or multi-dimensional data. Computer vision helps machines to identify and classify objects and then react to what they “see”, working on image acquisition, image processing and image analysis and understanding.

- Explainable AI (XAI) – XAI is a set of processes and methods that allows human users to understand and trust the results and output created by machine learning algorithms. It is used to describe an AI model, its expected impact and potential biases and helps to characterise model accuracy, fairness, transparency and outcomes in AI-powered decision making.

AI is not futuristic. The average person interacts with an AI programme every five minutes!

| | | |
|---|---|---|
| Chatbots (online customer support) | Traffic alerts on Google Maps | Predictive text |
| Speech recognition software | Tagging on social media | Purchase/ad recommendations |
| Video surveillance | Self-driving cars | Virtual/digital assistants |
| Real-time dynamic pricing | Biometric recognition | Email filtering (spam) |
| E-payment fraud identification | Autocorrect | Copywriting/editing |

Check out the article on ChatGPT in Audit & Risk

What might auditing AI look like?

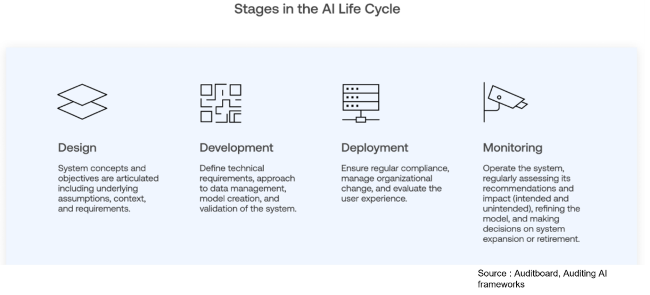

Internal auditors can take comfort. According to experts at AuditBoard, the lifecycle of AI is a familiar one from a process perspective. Assurance may be required at any single stage, end-to-end across the lifecycle, or over overarching themes such as governance and security.

Waiting for clarity and certainty before starting to provide assurance is not an option but, as always, be wary of providing false assurance.

ISACA, a leading source of information for internal auditors, identifies areas of AI oversight in the diagram below. The headings are familiar governance themes that internal audit can provide assurance over.

Examples of introductory engagements might include:

- working with colleagues in IT to list all AI applications in use and risk assess them.

- auditing business-critical algorithms. Depending on the application these may not be AI, but they are a credible entry point from which to build confidence and expand.

- auditing first and second line activities: what they are doing and how they are identifying and managing AI risk. Leverage their operational knowledge and supplement it with research, expert advice and so on.
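The first of these engagements, listing AI applications and risk assessing them, can be sketched in a few lines of Python. This is a minimal illustration only: the `AIApplication` record, the 1 to 5 likelihood and impact scales, and the example entries are all assumptions made for the sketch, not part of any framework cited in this guidance.

```python
from dataclasses import dataclass


@dataclass
class AIApplication:
    """One row in a hypothetical AI inventory agreed with IT."""
    name: str
    owner: str
    likelihood: int  # 1 (rare) to 5 (almost certain); scale is an assumption
    impact: int      # 1 (minor) to 5 (severe); scale is an assumption

    @property
    def risk_score(self) -> int:
        # Simple likelihood x impact scoring, as used in many risk matrices.
        return self.likelihood * self.impact


def rank_by_risk(inventory: list[AIApplication]) -> list[AIApplication]:
    """Return the inventory sorted from highest to lowest risk score."""
    return sorted(inventory, key=lambda app: app.risk_score, reverse=True)


# Illustrative entries only; a real inventory comes from the organisation.
inventory = [
    AIApplication("Customer support chatbot", "Customer services", 3, 2),
    AIApplication("CV sifting tool", "HR", 3, 5),
    AIApplication("Email spam filter", "IT", 2, 1),
]

for app in rank_by_risk(inventory):
    print(f"{app.risk_score:>2}  {app.name} ({app.owner})")
```

A ranked list like this is only a starting point for audit planning. The scoring method should follow the organisation's own risk methodology rather than the simple product used here.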

Challenges and solutions for Internal Audit

It is vital for internal audit to recognise that AI is a rapidly developing area where technology, standards and legislation are evolving. Taking advantage of AI opportunities means that an organisation often has to set its own values, expectations and standards beyond what is required in a geography or industry.

The following table of challenges and solutions is taken from ISACA’s Auditing AI publication.

| Challenge | Solution |
|---|---|
| 1. Immature auditing frameworks and/or lack of understanding regarding regulations specific to AI | Adopt and adapt existing frameworks and regulations |
| 2. Limited precedents for AI use cases | Explain and communicate proactively about AI with stakeholders |
| 3. Uncertain definitions and taxonomies of AI | Explain and communicate proactively about AI with stakeholders |
| 4. Wide variance among AI systems and solutions | Become informed about AI design and architecture to set proper scope |
| 5. Emerging nature of AI technology | Become informed about AI design and architecture to set proper scope |
| 6. Lack of explicit AI auditing guidance | Focus on transparency through an iterative process |
| 7. Lack of strategic starting points | Involve all stakeholders [Discuss AI with the Audit Committee to ensure they have an understanding of the key risks across the organisation] |
| 8. Possibly steep learning curve for the AI internal auditor | Become informed about AI design and engage specialists as needed |
| 9. Supplier risk created by AI outsourcing to third parties | Document architectural practices for cross-team transparency |

Barriers and enablers to assurance were also the subject of a 2022 report by the UK government’s Centre for Data Ethics and Innovation. It is a useful read alongside this guidance.

Skills

Competence with digital technology is a prerequisite for all internal auditors – not just traditional IT auditors. That is not to say you need to be a data engineer, but you do need to know when you need their expertise and how to engage with them and understand what they are telling you.

Understanding roles such as data scientists, data engineers, architects and programmers is important, as they are responsible for the strategic and operational activities needed to implement and manage AI: data process modelling, programming, data warehousing, the deployment of machine learning tool kits, cloud computing, cloud storage, application software testing, and the installation and commissioning of commercial off-the-shelf software and physical AI robots.

Regulation and Legislation

There is currently an absence of regulations, standards or mandates. This is typical of fast-paced technology at an early stage of adoption, where regulatory and compliance frameworks are still being established by industry and governments.

Internal auditors should maintain awareness of developments in this space.

The UK government has set up an AI Standards Hub, which is a useful portal for internal auditors as it contains everything that is currently available on the topic. The Office for Artificial Intelligence is part of the Department for Science, Innovation and Technology (previously part of the Department for Business, Energy and Industrial Strategy (BEIS)).

In 2022 the UK government published its draft AI policy, 'Establishing a pro-innovation approach to regulating AI: Policy Statement', outlining its approach to regulating AI in the UK:

- pro-innovation and risk-based

- proportionate and adaptable

- cross-sectoral principles, devolved to multiple regulators rather than a new central regulator:

  - Ensure that AI is used safely

  - Ensure that AI is technically secure and functions as designed

  - Make sure that AI is appropriately transparent and explainable

  - Embed considerations of fairness into AI

  - Define legal persons’ responsibility for AI governance

  - Clarify routes to redress or contestability

In December 2022, having reached agreement among member states on the proposed AI Act, the EU progressed to negotiations with the European Parliament. The Act takes a risk-based approach with three risk categories. First, AI applications and systems that create an unacceptable risk, such as government-run social scoring of the type used in China, are banned. Second, high-risk applications, such as a CV-scanning tool that ranks job applicants, are subject to specific legal requirements. Lastly, applications not explicitly banned or listed as high-risk are largely left unregulated.
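The three-category structure described above lends itself to a simple first-pass triage of an organisation's AI use cases. The sketch below is illustrative only: the `RiskTier` names, the `classify` function and the two tagged use cases (taken from the examples in the paragraph above) are assumptions, and it is no substitute for assessing each system against the legislation itself with legal advice.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "banned"
    HIGH = "subject to specific legal requirements"
    OTHER = "largely unregulated"


# Illustrative tags only: these two examples come from the text above.
# A real assessment must be made against the Act itself.
UNACCEPTABLE_USES = {"social scoring"}
HIGH_RISK_USES = {"cv ranking"}


def classify(use_case: str) -> RiskTier:
    """Map a use-case tag to one of the three risk tiers."""
    tag = use_case.strip().lower()
    if tag in UNACCEPTABLE_USES:
        return RiskTier.UNACCEPTABLE
    if tag in HIGH_RISK_USES:
        return RiskTier.HIGH
    # Anything not explicitly banned or listed as high-risk.
    return RiskTier.OTHER


for use_case in ["Social scoring", "CV ranking", "Spam filtering"]:
    tier = classify(use_case)
    print(f"{use_case}: {tier.name} ({tier.value})")
```

Checking the banned category first mirrors the order in which the categories are described, so a use case tagged in both sets would be treated as unacceptable.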

Governance

The definition of AI is not fixed and there is no global agreement on a common definition or taxonomy on which a set of good practices can be based.

In the absence of external requirements, an organisation is accountable for its own governance. This is an area in which internal audit can be involved and add value. Questions to consider in respect of strategy and governance include:

- what is the strategy/framework for the adoption of AI technology?

- how does AI benefit organisational objectives?

- what governance framework is in place to ensure adequate control and oversight?

The risks at this level are not dissimilar to those of an IT strategy in terms of misalignment with business need, poor tactical execution and lack of accountability. Our guidance on data governance is a useful reference.

Audit frameworks

Existing and recently introduced frameworks are available to help internal auditors provide AI assurance.

- Global IIA – Artificial Intelligence Auditing Framework

Six elements | governance, data architecture and infrastructure, data quality, measuring performance, human factor and the black box factor. These are detailed in a three-part paper, ‘Considerations for the profession’:

Part 1 | the basics, opportunity and risk, framework overview

Part 2 | governance, data architecture and infrastructure, data quality

Part 3 | measuring performance, human factor and the black box factor

- COBIT (Control Objectives for Information and Related Technologies)

Within the COBIT® 2019 framework, the suggested governance and management objectives relevant to a risk and controls review of AI are as follows:

| Select COBIT 2019 Governance and Management Objectives Relevant to AI Risk and Controls Review | |
|---|---|
| EDM01 Ensured Governance Framework Setting and Maintenance | EDM01.02 Direct the governance system |
| APO02 Managed Strategy | APO02.03 Define target digital capabilities |
| APO04 Managed Innovation | APO04.04 Assess the potential of emerging technologies and innovative ideas |

At a more in-depth level, the COBIT 2019 DSS (Deliver, Service and Support) domain has DSS06 Managed Business Process Controls, within which management practice DSS06.05 “Ensure traceability and accountability for information events” could be used to ensure AI activity audit trails provide sufficient information to understand the rationale behind every AI decision made within the organisation.
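To make the audit trail idea concrete, the sketch below shows one way an auditor might test whether logged AI decisions carry enough information to be traced and explained. It is a minimal illustration under stated assumptions: the `DecisionRecord` fields and the `REQUIRED` list are hypothetical and are not prescribed by COBIT, so the actual attributes should be agreed with the system owner.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DecisionRecord:
    """Hypothetical audit trail entry for a single AI decision."""
    decision_id: str
    timestamp: str                     # ISO 8601 string, e.g. "2023-05-01T09:30:00Z"
    model_version: str
    input_reference: str               # pointer to where the inputs are stored
    outcome: str
    rationale: Optional[str] = None    # e.g. the main factors behind the outcome
    reviewed_by: Optional[str] = None  # human reviewer, if any


# Fields assumed necessary to understand why a decision was made.
REQUIRED = (
    "decision_id", "timestamp", "model_version",
    "input_reference", "outcome", "rationale",
)


def missing_fields(record: DecisionRecord) -> list[str]:
    """Return the names of required fields that are empty or None."""
    return [name for name in REQUIRED if not getattr(record, name)]


record = DecisionRecord(
    decision_id="D-0001",
    timestamp="2023-05-01T09:30:00Z",
    model_version="1.4.2",
    input_reference="store/inputs/D-0001",
    outcome="declined",
)
print(missing_fields(record))
```

Running the check across a sample of logged decisions gives a quick measure of how often the rationale is missing, which is usually the weakest part of an AI audit trail.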

- US Government Accountability Office AI Framework

Designed for management but readily adaptable for internal auditors, the framework focuses on four elements: governance, data, performance and monitoring. Each section includes questions which may be useful for assurance engagements. Whilst US-focused, the principles are relevant in the UK and Ireland.

- Singapore Personal Data Protection Commission Model AI Governance Framework

Produced in conjunction with the World Economic Forum, this framework is designed to be practical in supporting management’s implementation of AI across governance, human factors in augmented decision-making, operational management and communications. The companion self-assessment guide is a great tool for all internal auditors to adapt for assurance purposes.

- Information Commissioner’s Office (ICO), UK

The UK’s ICO issued guidelines that serve as a baseline for internal auditors reviewing AI applications, which take into consideration data protection principles according to the UK’s Data Protection Act and the EU General Data Protection Regulation (GDPR):

- Accountability and governance in AI, including Data Protection Impact Assessments (DPIAs) | Completing a DPIA is legally required if organisations use AI systems that process personal data. DPIAs offer an opportunity to consider how and why organisations are using AI systems to process personal data and what the potential risk could be. Additionally, depending on how they are designed and deployed, AI systems will inevitably involve making trade-offs between privacy and other competing rights and interests. It is the job and duty of the internal auditors to understand what these trade-offs may be and how organisations can manage them.

- Fair, lawful and transparent processing | AI systems process personal data in various stages for a variety of purposes. If organisations fail to distinguish each distinct processing operation and identify an appropriate lawful basis for it, they risk failing to comply with the data protection principle of lawfulness. Internal auditors should verify that these purposes have been identified and that each has an appropriate lawful basis.

- Data minimisation and security | Internal auditors need to ensure that personal data is processed in a manner that guarantees appropriate levels of security against unauthorised or unlawful processing, accidental loss, destruction or damage. They also need to verify that all movement of personal data from one location to another, and its storage, is recorded and documented. This will help to monitor the effectiveness of security risk controls.

- The exercising of individual rights in AI systems, including rights related to automated decision-making | Under data protection law and regulations such as GDPR, individuals have rights relating to their personal data. Within the scope of AI, these rights apply wherever personal data is used at any point in the development and deployment life cycle of an AI system. Internal auditors need to ensure that the individual rights to information, access, rectification, erasure, restriction of processing and data portability, and the right to object (rights referred to in Articles 13-21 of the GDPR), are considered when developing and deploying AI.

Data

Following on from the earlier section referencing the ICO, data quality is critical: AI uses and, in many cases, learns from the data available to it. This is a readily auditable area for internal audit as the principles of data quality are consistent across media and use. One additional consideration that is crucial for AI data quality is bias. For example, if an organisation has a cultural bias towards recruiting women of a certain age for a particular role, then an AI programme used to sift CVs will likely perpetuate it, because of the data available to learn from and any unconscious bias hard-coded into the programming.
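A simple way to test for the kind of bias described above is to compare selection rates between groups in the tool's outputs. The sketch below is a minimal illustration: the function names, the sample data and the 0.8 threshold (a commonly cited rule of thumb, used here purely as an assumption) are not drawn from this guidance, and a low ratio is a prompt for further investigation rather than proof of bias.

```python
from collections import Counter


def selection_rates(outcomes):
    """Compute the selection rate per group.

    outcomes: iterable of (group, was_selected) pairs.
    Returns a dict mapping each group to selected / total.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical sift results: group A has 8 of 10 selected, group B 4 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)
THRESHOLD = 0.8  # assumed rule-of-thumb threshold, not a regulatory requirement
print(rates, ratio, "investigate" if ratio < THRESHOLD else "no flag")
```

The same comparison can be run on the historical data used to train the tool, which helps to show whether a disparity originates in the data or in the model.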

Speed of innovation

The emergent nature of AI technology and the wide variance among AI systems and solutions make this a complex area to audit. There is often uncertainty around the scope of AI within an organisation because end-user applications and cloud services are easy to access and sit outside the scope of the IT function.

At this evolutionary stage internal audit can be influential in challenging whether existing ways of working are fit for purpose, raising risk awareness and driving the governance agenda. Most of all, internal audit must stay informed and alert to emerging AI risk.

Third parties

The complexity of AI and a shortage of in-house expertise may lead an organisation to outsource AI development projects. This exposes the organisation to additional risks which internal audit should include in assurance engagements. It also introduces the risk that in-house teams' skills become outdated and that understanding of enterprise AI is dispersed across (potentially) numerous providers.

There is considerable diversity in the digital journey, and AI adoption, across organisations, industries and sectors. Consequently, digital maturity and expectations of internal auditors will also vary. Chief audit executives should ensure that their internal audit function as a minimum keeps pace with their organisation and sector. Ideally, internal audit should be ahead of the curve, taking advantage of technology to improve efficiency and effectiveness alongside building risk awareness and credibility.

AI tools to write reports, prepare information and perform data analysis are available for every budget, including open access (free to use).

Conclusion

Although gaining an understanding of AI technology poses significant challenges, internal auditors should take care not to think of AI as purely a technical issue. There are ethical, human, strategic and operational issues too. With evolving regulation and auditing frameworks, internal auditors can confidently provide assurance on many aspects, including governance, alongside upskilling, working with subject matter experts and coming to terms with auditing novel and emerging risk.